Arnab A, Miksik O, Torr P H S. On the robustness of semantic segmentation models to adversarial attacks[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018: 888-897.

1. Overview

1.1. Motivation

- adversarial examples have not been extensively studied on multiple large-scale datasets or on structured prediction tasks

In this paper

- evaluate adversarial attacks on modern semantic segmentation models, using two large-scale datasets

1.2. Observation

- DeeplabV2 is more robust than the other approaches

- multiscale networks are more robust to multiple different attacks, including white-box attacks

- adversarial examples are less effective when processed at different scales

- input transformations only improve robustness against attacks that do not take these transformations into account

- mean-field CRF inference increases robustness to untargeted adversarial attacks

2. Adversarial Examples

2.1. Solution

- y_t. target label

- c. positive scalar

- L-BFGS. expensive, requires several minutes to produce a single attack
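
The symbols above presumably refer to the box-constrained formulation of Szegedy et al., which L-BFGS is used to solve. A sketch of that objective, where the perturbation r, loss L, network f with parameters θ, and input dimension m are notation assumed here rather than taken from these notes:

$$
\min_{r} \; c\,\lVert r \rVert_2 + L\big(f(x + r;\,\theta),\, y_t\big) \quad \text{s.t.} \quad x + r \in [0, 1]^m
$$

The adversarial example is then x + r, and c trades off the size of the perturbation against how strongly the prediction is pushed towards y_t.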

2.2. FGSM

- single-step, untargeted attack

- minimises the l_∞ norm of the perturbation, bounded by the parameter ε
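
A minimal sketch of the single-step update, x_adv = x + ε · sign(∇_x L(f(x), y)). The toy linear softmax classifier, the weight matrix `W` and the helper names are assumptions made for illustration; they stand in for a segmentation network and are not from the paper.

```python
import numpy as np

def softmax(z):
    z = z - z.max()              # shift logits for numerical stability
    e = np.exp(z)
    return e / e.sum()

def loss_and_grad(W, x, y):
    """Cross-entropy loss of the toy model softmax(W @ x) and its gradient w.r.t. x."""
    p = softmax(W @ x)
    onehot = np.zeros_like(p)
    onehot[y] = 1.0
    return -np.log(p[y]), W.T @ (p - onehot)

def fgsm(W, x, y, eps):
    """One untargeted step: move every input element by eps in the direction that raises the loss."""
    _, grad = loss_and_grad(W, x, y)
    return x + eps * np.sign(grad)

rng = np.random.default_rng(0)
W = rng.normal(size=(3, 8))      # hypothetical 3-class linear model
x = rng.normal(size=8)           # hypothetical input
y = int(np.argmax(W @ x))        # use the model's own prediction as the label
x_adv = fgsm(W, x, y, eps=0.1)
```

Every element of `x_adv` differs from `x` by at most ε, which is the l_∞ bound. A real image attack would additionally clip the result to the valid pixel range, which this sketch omits.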

2.3. FGSM ll

- single-step, choose the target class as the least likely class predicted by network
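
A minimal sketch of the two ingredients, assuming per-pixel class scores of shape (C, H, W) as in segmentation: picking the least likely class at every pixel, then stepping down the gradient of the loss computed against those target labels. The function names and the toy arrays are hypothetical; the gradient would come from the network in practice.

```python
import numpy as np

def least_likely_labels(scores):
    """scores: (C, H, W) per-pixel class scores. Returns the (H, W) map of least likely classes."""
    return scores.argmin(axis=0)

def fgsm_ll_step(x, grad_target_loss, eps):
    """Targeted step: descend the loss taken w.r.t. the target labels (note the minus sign)."""
    return x - eps * np.sign(grad_target_loss)

# Toy example: 3 classes on a 2x2 "image".
scores = np.array([
    [[0.1, 0.7], [0.2, 0.5]],
    [[0.6, 0.2], [0.3, 0.4]],
    [[0.3, 0.1], [0.5, 0.1]],
])
y_ll = least_likely_labels(scores)

x = np.zeros((2, 2))
grad = np.array([[1.0, -2.0], [0.0, 3.0]])   # stand-in for the gradient of L(f(x), y_ll) w.r.t. x
x_adv = fgsm_ll_step(x, grad, eps=0.5)
```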

2.4. Iterative FGSM

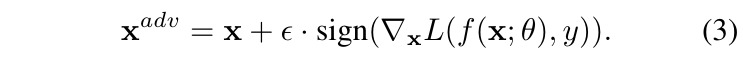

- iterative manner, untargeted

- clip(a, ε). ensures each element a_i of a stays within ε of the corresponding element x_i of the original input, i.e. in the range [x_i - ε, x_i + ε]

- α. step-size
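
A minimal sketch of the iterative update, assuming the usual form x_{t+1} = clip(x_t + α · sign(∇L(x_t)), ε) starting from the clean input. `grad_fn` is a hypothetical callable returning the loss gradient with respect to the input, and the quadratic toy loss below is only there to make the example runnable.

```python
import numpy as np

def clip_eps(x_adv, x_orig, eps):
    """Keep every element of x_adv within eps of the corresponding element of x_orig."""
    return np.clip(x_adv, x_orig - eps, x_orig + eps)

def iterative_fgsm(grad_fn, x, eps, alpha=1.0, n_iter=10):
    """Repeated untargeted sign-gradient steps of size alpha, projected back after each step."""
    x_adv = x.copy()
    for _ in range(n_iter):
        x_adv = x_adv + alpha * np.sign(grad_fn(x_adv))
        x_adv = clip_eps(x_adv, x, eps)
    return x_adv

# Toy loss L(z) = 0.5 * ||z - c||^2, whose gradient is z - c.
c = np.array([1.0, -1.0, 0.5])
x = np.zeros(3)
x_adv = iterative_fgsm(lambda z: z - c, x, eps=2.0, alpha=1.0, n_iter=3)
```

Here the third step would overshoot to a distance of 3 from `x`, and the clip pulls it back to the ε = 2 boundary.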

2.5. Iterative FGSM ll

- iterative manner, targeted

- y_ll. least likely class predicted by the network
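
Assuming the targeted variant mirrors the untargeted update with the sign of the step flipped, it can be written as follows, where L, f and θ (loss, network and parameters) are notation assumed here:

$$
x^{adv}_{0} = x, \qquad
x^{adv}_{t+1} = \mathrm{clip}\Big(x^{adv}_{t} - \alpha \cdot \mathrm{sign}\big(\nabla_{x^{adv}_{t}} L(f(x^{adv}_{t};\,\theta),\, y_{ll})\big),\; \varepsilon\Big)
$$

The minus sign makes each step decrease the loss with respect to y_ll, pulling the prediction towards the least likely class instead of merely away from the correct one.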

3. Adversarial Defenses and Evaluation

- training with adversarial examples generated by single-step methods conferred robustness to other single-step attacks with negligible performance difference to normally trained networks on clean inputs

- however, the adversarially trained network was still as vulnerable to iterative attacks as standard models

- currently, no effective defense against all adversarial attacks exists

- state-of-the-art methods should be preferred in settings where both accuracy and robustness are a priority

4. Experimental Set-up

- number of iterations. min(ε+4, ceil(1.25ε))

- step-size α=1

- l_∞ norm bound ε. {0.25, 0.5, 1, 2, 4, 8, 16, 32}
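
The iteration rule can be tabulated for the listed ε values. This is a small sketch; the function and variable names are made up for illustration.

```python
import math

def num_iterations(eps):
    """Number of attack iterations for a given l_inf bound: min(eps + 4, ceil(1.25 * eps))."""
    return int(min(eps + 4, math.ceil(1.25 * eps)))

EPSILONS = [0.25, 0.5, 1, 2, 4, 8, 16, 32]
schedule = {eps: num_iterations(eps) for eps in EPSILONS}
# schedule == {0.25: 1, 0.5: 1, 1: 2, 2: 3, 4: 5, 8: 10, 16: 20, 32: 36}
```

The ceil(1.25ε) term governs up to ε = 16, where both terms equal 20. Only at ε = 32 does the ε + 4 cap take over, giving 36 iterations instead of 40.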

5. Experiments

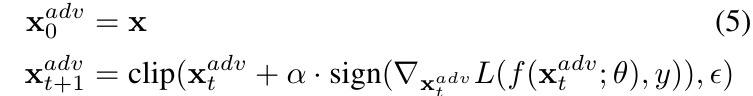

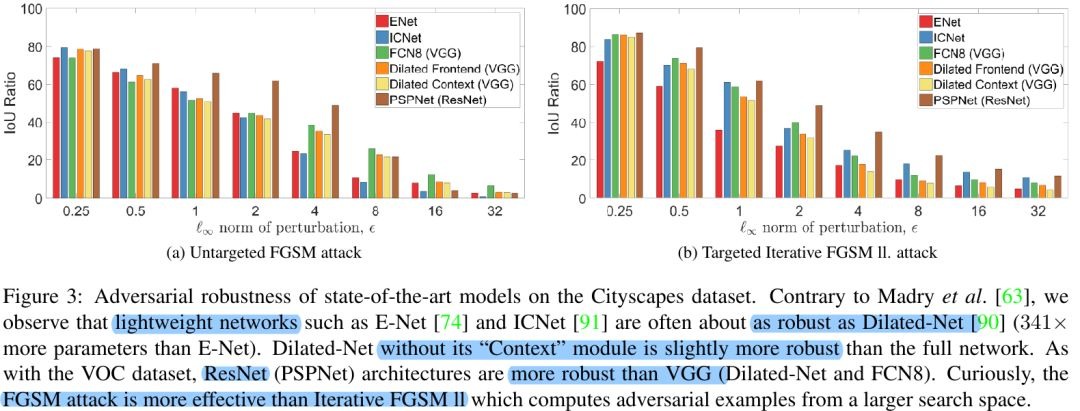

5.1. The Robustness of Different Networks

- ResNet-based models (with skip connections) are more robust to single-step attacks

- no model performs well under the Iterative FGSM ll attack, but its perturbations tend not to transfer to other models, making it less useful in practical black-box attacks

5.2. Model Capacity and Residual Connections

- lightweight models are affected similarly to heavyweight models

- adding the context module of Dilated-Net onto the Front-end slightly reduces robustness across all ε values on Cityscapes

5.3. Multiscale Processing

- perturbations generated from the multiscale and 100% resolutions of Deeplabv2 transfer the best

- the multiscale version of Deeplabv2 is the most robust to white-box attacks

- FCN8 with multiscale inputs is more robust to white-box attacks

- adversarial attacks generated at a single scale are no longer as malignant when processed at another

- networks that process images at multiple scales are more robust
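
A minimal sketch of multiscale inference, to make concrete why a perturbation crafted at one resolution is diluted: the image is resized to several scales, scored at each, and the score maps are fused. The scales (50%, 75%, 100%), the element-wise max fusion, the nearest-neighbour resize and the toy `score_fn` are all assumptions for illustration, not details taken from these notes.

```python
import numpy as np

def resize_nearest(arr, out_h, out_w):
    """Nearest-neighbour resize over the last two axes of arr."""
    in_h, in_w = arr.shape[-2:]
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    return arr[..., rows[:, None], cols[None, :]]

def multiscale_scores(score_fn, image, scales=(0.5, 0.75, 1.0)):
    """image: (3, H, W). score_fn maps (3, h, w) -> (C, h, w). Returns fused (C, H, W) scores."""
    H, W = image.shape[-2:]
    fused = None
    for s in scales:
        h, w = max(1, round(H * s)), max(1, round(W * s))
        scores = score_fn(resize_nearest(image, h, w))   # score the rescaled image
        scores = resize_nearest(scores, H, W)            # bring scores back to full size
        fused = scores if fused is None else np.maximum(fused, scores)
    return fused

def toy_score_fn(img):
    """Hypothetical 2-class scorer based on mean brightness."""
    m = img.mean(axis=0)
    return np.stack([m, 1.0 - m])

image = np.random.default_rng(0).random((3, 8, 8))
fused = multiscale_scores(toy_score_fn, image)
labels = fused.argmax(axis=0)
```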

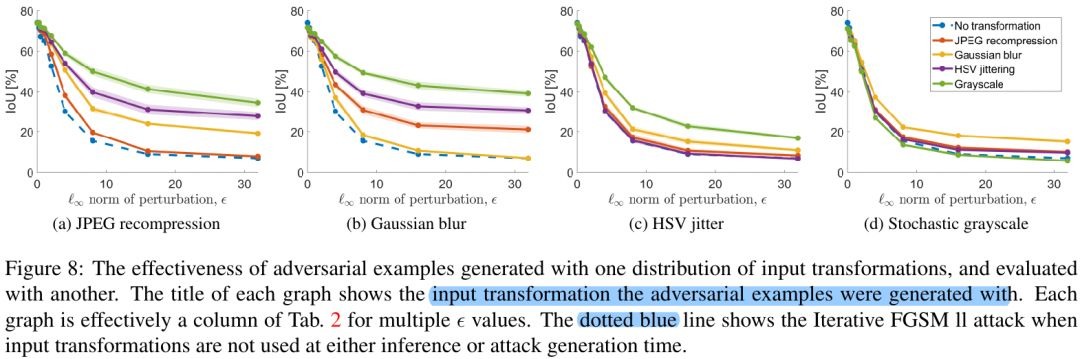

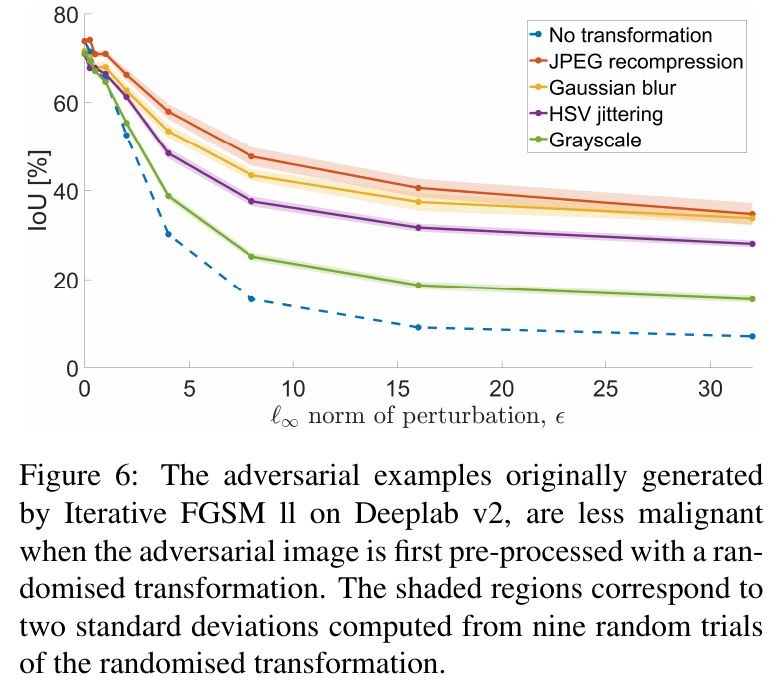

5.4. Input Transformation

- JPEG recompression

- Gaussian Blur

- HSV Jitter

- Grayscale

- JPEG recompression and Gaussian blur provide substantial benefits
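
Two of the listed transformations can be sketched in plain NumPy as preprocessing applied before the network sees the input. The kernel radius, edge padding and luma weights are common choices assumed here, not settings from the paper. JPEG recompression and HSV jitter are left out because they need an image library.

```python
import numpy as np

def to_grayscale(img):
    """img: (H, W, 3) RGB. Returns an (H, W, 3) image whose channels all hold the luma value."""
    gray = img @ np.array([0.299, 0.587, 0.114])
    return np.repeat(gray[..., None], 3, axis=2)

def gaussian_blur(img, sigma=1.0):
    """Separable Gaussian blur of an (H, W, 3) image, with edge padding."""
    H, W = img.shape[:2]
    radius = int(3 * sigma + 0.5)
    xs = np.arange(-radius, radius + 1)
    k = np.exp(-xs**2 / (2 * sigma**2))
    k /= k.sum()                                     # normalise so brightness is preserved
    padded = np.pad(img, ((radius, radius), (radius, radius), (0, 0)), mode="edge")
    tmp = sum(k[i] * padded[i:i + H] for i in range(2 * radius + 1))     # vertical pass
    return sum(k[j] * tmp[:, j:j + W] for j in range(2 * radius + 1))    # horizontal pass

flat = np.full((5, 5, 3), 7.0)
blurred = gaussian_blur(flat, sigma=1.0)
gray = to_grayscale(flat)
```

A defended prediction would then be the network applied to `gaussian_blur(x_adv)` rather than to `x_adv` directly.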

5.5. Expectation over Transformation (EOT) Attack

- T. the distribution of transformation functions t
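
A minimal sketch of the idea behind EOT: instead of using the gradient for a single input, the attacker averages the loss gradient over transformations t sampled from T, so the perturbation stays effective after the defender's transformation. The affine transformation family, the `(forward, backward)` pair convention and all names below are assumptions for illustration.

```python
import numpy as np

def eot_gradient(grad_fn, x, sample_transform, n_samples=32):
    """Monte Carlo estimate of the gradient w.r.t. x of E_{t~T}[L(t(x))].

    sample_transform() returns (forward, backward): forward applies t, and
    backward maps a gradient w.r.t. t(x) back to a gradient w.r.t. x.
    """
    g = np.zeros_like(x)
    for _ in range(n_samples):
        forward, backward = sample_transform()
        g += backward(grad_fn(forward(x)))
    return g / n_samples

rng = np.random.default_rng(0)

def sample_affine():
    """Hypothetical T: random contrast a and brightness b, t(z) = a * z + b."""
    a, b = rng.uniform(0.8, 1.2), rng.normal(0.0, 0.05)
    return (lambda z: a * z + b), (lambda grad: a * grad)

# Toy loss L(z) = 0.5 * ||z - c||^2, whose gradient is z - c.
c = np.zeros(2)
x = np.array([1.0, 0.0])
g = eot_gradient(lambda z: z - c, x, sample_affine)
x_adv = x + 0.1 * np.sign(g)     # one FGSM-style step using the averaged gradient
```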

5.6. Transferability of Input Transformation